Identifying AI-generated content with SynthID

Being able to identify AI-generated content is critical to promoting trust in information. While not a silver bullet for addressing problems such as misinformation or misattribution, SynthID is a suite of promising technical solutions to this pressing AI safety issue.

This toolkit is currently in beta and continues to evolve. It is being integrated into a growing range of products, helping people and organizations work responsibly with AI-generated content.

SynthID embeds a digital watermark directly into AI-generated content, without compromising the original content.

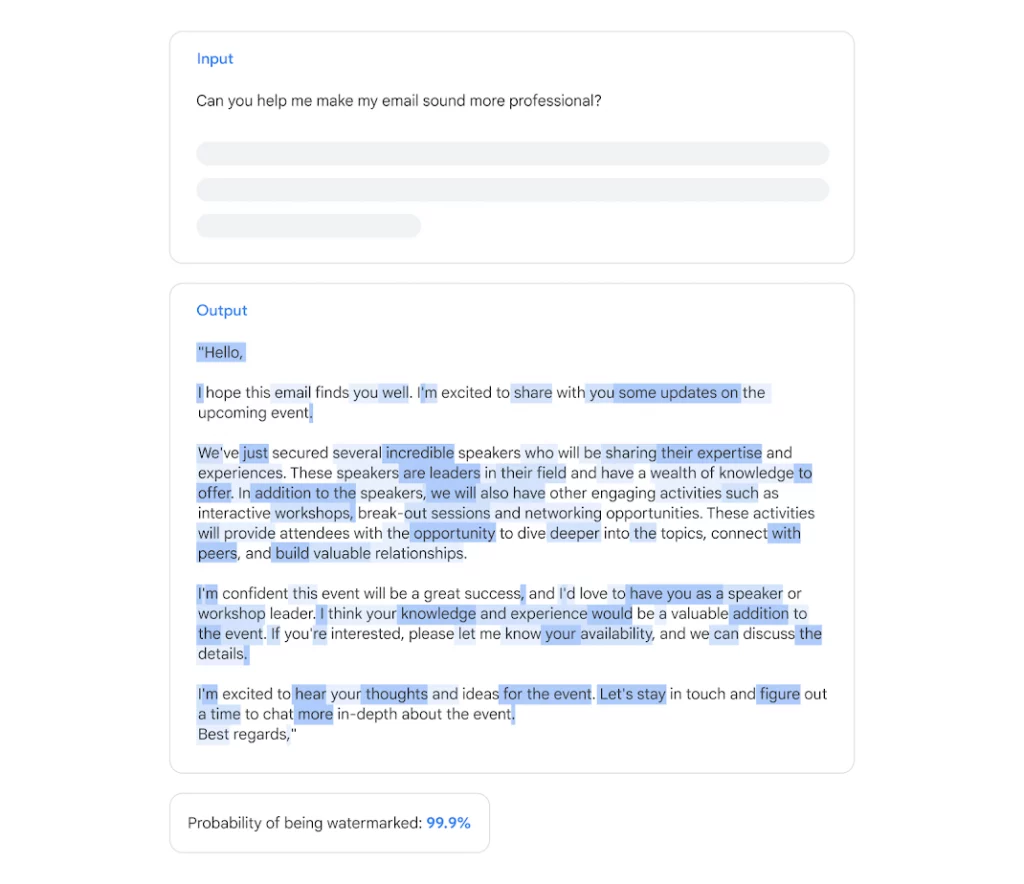

An LLM generates text one token at a time. A token can represent a single character, a word, or part of a phrase. To create a sequence of coherent text, the model predicts which token is most likely to come next. These predictions are based on the preceding words and the probability scores assigned to each potential token.

For example, given the phrase “My favorite tropical fruits are __,” the LLM might start completing the sentence with the tokens “mango,” “lychee,” “papaya,” or “durian,” and each token is given a probability score. When there’s a range of different tokens to choose from, SynthID can adjust the probability score of each predicted token, in cases where it won’t compromise the quality, accuracy and creativity of the output.

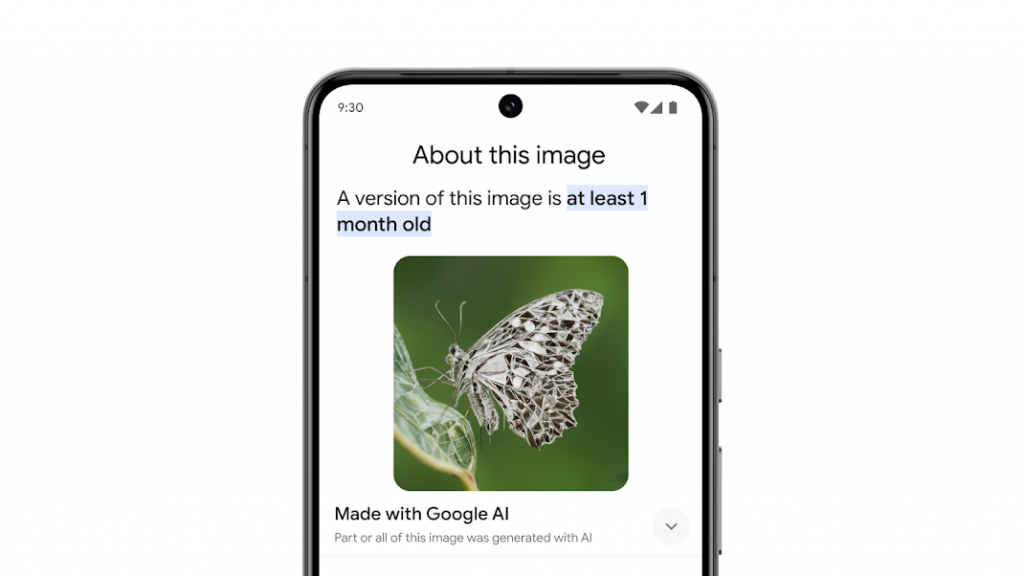

A piece of text generated by Gemini with the watermark highlighted in blue.
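The probability adjustment described above can be illustrated with a small toy. The sketch below is not SynthID's actual algorithm, which is specified in the research paper. It is a simplified keyed-bias scheme, and every name in it (`keyed_bit`, `adjust`, `generate`, `score`, `toy_model`, the `demo-key` string) is a hypothetical chosen for illustration. A secret key and the recent context decide which candidate tokens get a small probability boost, and a detector holding the same key checks whether boosted tokens appear more often than chance.

```python
import hashlib
import math
import random

CONTEXT_LEN = 1  # preceding tokens fed into the keyed hash (must be >= 1)

def keyed_bit(key, context, token):
    """Pseudorandom 0/1 value derived from the key, recent context, and candidate token."""
    payload = "|".join((key, *context, token)).encode("utf-8")
    return hashlib.sha256(payload).digest()[0] & 1

def adjust(probs, key, context, bias=2.0):
    """Boost candidates whose keyed bit is 1 by exp(bias), then renormalise."""
    weights = {tok: p * math.exp(bias * keyed_bit(key, context, tok))
               for tok, p in probs.items()}
    total = sum(weights.values())
    return {tok: w / total for tok, w in weights.items()}

def toy_model(context):
    """Stand-in for an LLM: a uniform distribution over 50 made-up tokens."""
    return {f"tok{i}": 1 / 50 for i in range(50)}

def generate(model_probs, length, key=None, seed=0):
    """Sample `length` tokens; passing a key switches the watermark on."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(length):
        context = tuple(tokens[-CONTEXT_LEN:])
        probs = model_probs(context)
        if key is not None:
            probs = adjust(probs, key, context)
        tokens.append(rng.choices(list(probs), weights=list(probs.values()))[0])
    return tokens

def score(tokens, key):
    """Fraction of tokens whose keyed bit is 1: near 0.5 without the watermark."""
    bits = [keyed_bit(key, tuple(tokens[max(0, i - CONTEXT_LEN):i]), tok)
            for i, tok in enumerate(tokens)]
    return sum(bits) / len(bits)

# Illustrative numbers only, not taken from any real model.
fruit_probs = {"mango": 0.4, "lychee": 0.3, "papaya": 0.2, "durian": 0.1}
adjusted = adjust(fruit_probs, "demo-key", ("are",))

marked = generate(toy_model, 1000, key="demo-key", seed=1)
plain = generate(toy_model, 1000, key=None, seed=1)
print(score(marked, "demo-key"), score(plain, "demo-key"))
```

In this toy the boost is applied everywhere. A production scheme would, as the text notes, hold back where only one token is plausible, since shifting probabilities there could hurt accuracy.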

In October 2024, we described the SynthID text watermarking technology in a detailed research paper published in Nature. We also open-sourced it through the Google Responsible Generative AI Toolkit, which provides guidance and essential tools for creating safer AI applications. We have been working with Hugging Face to make the technology available on their platform, so developers can build with it and incorporate it into their models.

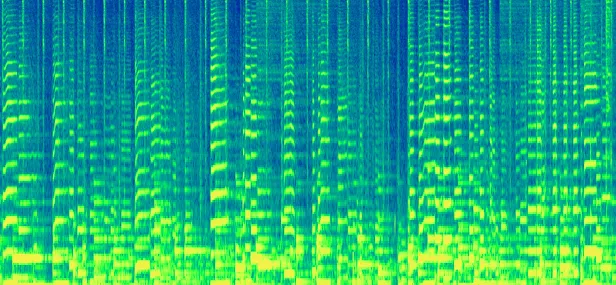

Once the spectrogram is computed, the digital watermark is added into it. Finally, the spectrogram is converted back to the waveform. During this conversion step, SynthID leverages audio properties to ensure that the watermark is inaudible to the human ear so that it doesn’t compromise the listening experience.

The watermark is robust to many common modifications such as noise additions, MP3 compression or speeding up and slowing down the track. SynthID can also scan the audio track to detect the presence of the watermark at different points to help determine if parts of it may have been generated by Lyria.
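The spectrogram round trip and the scan "at different points" can be sketched with a textbook technique. The code below is a minimal illustration and not SynthID's method, whose details are not given here. It assumes an additive, keyed pattern written into the upper frequency bins of each frame, detected by correlation. The frame size, bin range, strength, threshold, and all function names are hypothetical, and the toy makes no attempt at the robustness to compression or speed changes described above.

```python
import cmath
import math
import random

FRAME = 64              # samples per frame (toy value)
BINS = range(16, 32)    # upper-frequency bins that carry the mark

def dft(frame):
    """Naive discrete Fourier transform of one frame (one spectrogram column)."""
    n = len(frame)
    return [sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
            for k in range(n)]

def idft(spectrum):
    """Inverse transform back to a real-valued waveform."""
    n = len(spectrum)
    return [(sum(c * cmath.exp(2j * math.pi * k * t / n)
                 for k, c in enumerate(spectrum)) / n).real
            for t in range(n)]

def key_pattern(key):
    """Keyed +1/-1 sign for every marked bin."""
    rng = random.Random(key)
    return {k: rng.choice((-1.0, 1.0)) for k in BINS}

def embed(frame, key, strength=0.2):
    """Add the keyed pattern to the spectrum, then convert back to samples."""
    spectrum = dft(frame)
    for k, sign in key_pattern(key).items():
        spectrum[k] += strength * sign
        spectrum[FRAME - k] += strength * sign  # mirror bin keeps the waveform real
    return idft(spectrum)

def frame_score(frame, key):
    """Correlate the frame's spectrum with the keyed pattern."""
    spectrum = dft(frame)
    pattern = key_pattern(key)
    return sum(sign * spectrum[k].real for k, sign in pattern.items()) / len(pattern)

def scan(samples, key, threshold=0.1):
    """Flag each frame of the track as watermarked or not."""
    starts = range(0, len(samples) - FRAME + 1, FRAME)
    return [frame_score(samples[i:i + FRAME], key) > threshold for i in starts]

def host_track(n_frames, seed=0):
    """Synthetic audio: two low tones plus faint noise."""
    rng = random.Random(seed)
    return [0.4 * math.sin(2 * math.pi * 3 * t / FRAME)
            + 0.2 * math.sin(2 * math.pi * 7 * t / FRAME)
            + rng.gauss(0, 0.01)
            for t in range(n_frames * FRAME)]

# Watermark only the second half of an 8-frame track, then scan all of it.
track = host_track(8)
mixed = track[:4 * FRAME]
for i in range(4 * FRAME, 8 * FRAME, FRAME):
    mixed += embed(track[i:i + FRAME], "demo-key")
flags = scan(mixed, "demo-key")
print(flags)
```

Scanning frame by frame is what lets a detector point at the part of a track that carries the mark, rather than giving a single verdict for the whole file.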

This technology is available to Vertex AI customers using our text-to-image models, Imagen 3 and Imagen 2, which create high-quality images in a wide variety of artistic styles. SynthID also watermarks the image outputs on ImageFX. We’ve integrated SynthID into Veo as well, our most capable video generation model to date, which is available to select creators on VideoFX.

SynthID can also scan a single image, or the individual frames of a video, to detect digital watermarking. Users can check whether an image, or part of an image, was generated by Google’s AI tools through the About this image feature in Search or Chrome.

Our highest quality text-to-image model
